How to Choose the Best Automotive Camera for Outdoor AMR and Unmanned Vehicles?

Outdoor autonomous vehicles, such as AMRs (Autonomous Mobile Robots) and UGVs (Unmanned Ground Vehicles), operate in harsh and dynamic environments where reliable vision is crucial for safe navigation and real-time decision-making. Selecting the right camera specifications involves more than simply choosing a sensor with high resolution. It requires a systematic evaluation of interface technology, environmental durability, optical quality, and processing requirements to ensure that the perception system meets the performance demands of outdoor autonomy.

This article outlines the seven key factors engineers should consider when selecting cameras for outdoor autonomous vehicles.

1. Interface

The camera interface determines how image data is transmitted to the processing unit, directly affecting latency, bandwidth, and system robustness. For outdoor autonomous vehicles, two commonly used interfaces are GMSL™ and Ethernet.

◆ GMSL™ (Gigabit Multimedia Serial Link):

GMSL™ supports long-distance transmission (up to 15 meters) over a single coaxial or shielded twisted-pair (STP) cable. It is designed to handle harsh electromagnetic environments, providing strong EMI immunity, stable data transmission, and ultra-low latency, which are essential for real-time perception and obstacle avoidance. Additionally, GMSL™ supports Power-over-Coax (PoC), simplifying cabling and reducing system complexity.

◆ Ethernet:

Ethernet offers standardized networking and scalability, but it typically adds latency and often requires video compression to fit within the available bandwidth. This makes it better suited to non-time-critical sensor fusion than to real-time vision tasks.

For robust, low-latency vision in outdoor autonomous vehicles, GMSL™ is generally the preferred interface.
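A rough bandwidth estimate helps show why uncompressed video favors a multi-gigabit serial link. The sketch below is illustrative only: the 1080p, 30 fps, 16-bit-per-pixel stream and the nominal link rates (6 Gbps for a GMSL2-class forward channel, 1 Gbps for Gigabit Ethernet) are assumptions rather than figures from this article, and protocol overhead is ignored.

```python
def raw_video_gbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed video payload rate in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


def link_utilization(stream_gbps: float, link_gbps: float) -> float:
    """Fraction of the nominal link rate consumed by one stream."""
    return stream_gbps / link_gbps


if __name__ == "__main__":
    # Assumed example stream: 1920x1080, 30 fps, 16 bits per pixel (e.g. YUV 4:2:2).
    stream = raw_video_gbps(1920, 1080, 30, 16)

    # Assumed nominal link rates in Gbps; usable throughput is lower in practice.
    links = {"GMSL2-class serial link": 6.0, "Gigabit Ethernet": 1.0}
    for name, rate in links.items():
        print(f"{name}: {link_utilization(stream, rate):.0%} of nominal rate")
```

Under these assumptions a single uncompressed stream already consumes almost the entire nominal rate of a gigabit link, which is why Ethernet cameras usually compress video, while the serial link keeps ample headroom.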

2. Field of View (FOV) and Resolution

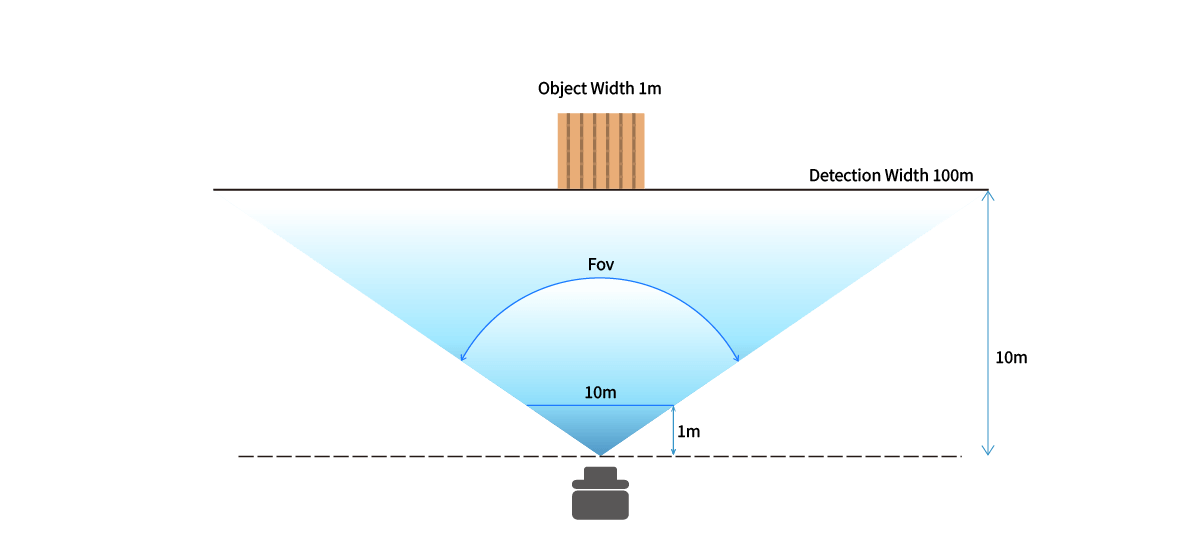

Determining how far and how wide an outdoor autonomous vehicle needs to “see” depends on its operating speed, the required control or reaction time, and the size of objects it must detect. These factors directly define the camera’s field of view (FOV) and resolution requirements.

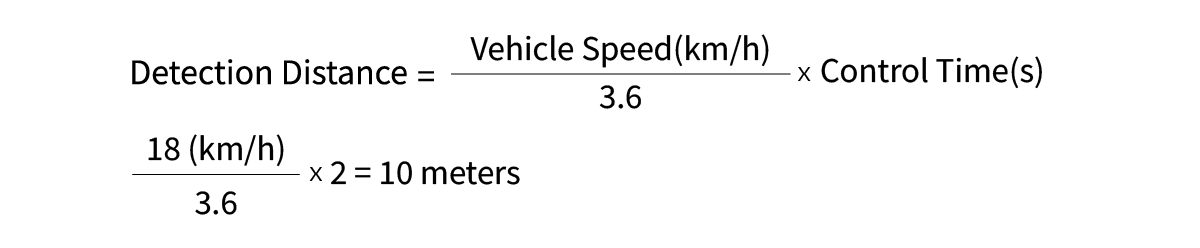

◆ Minimum Detection Distance:

For example, at a maximum speed of 18 km/h (5 m/s) with a 2-second reaction time, the vehicle travels 5 m/s × 2 s = 10 meters before it can respond, so it must detect objects at least 10 meters ahead.

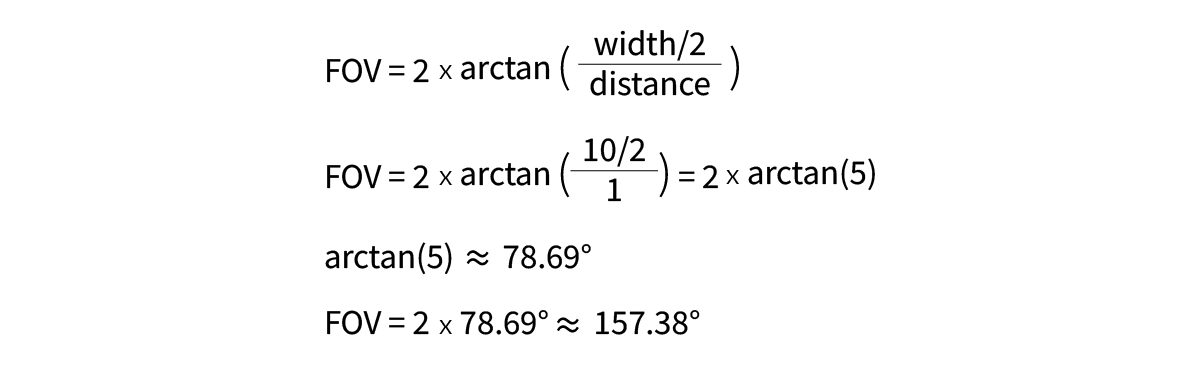

◆ Field of View (FOV):

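If the closest detection distance from the camera is 1 meter and the required coverage width at that distance is 10 meters, then the horizontal field of view (FOV) can be calculated as:

$$\text{FOV}_h = 2 \arctan\left(\frac{10\ \text{m} / 2}{1\ \text{m}}\right) = 2 \arctan(5) \approx 157.4^\circ$$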

Therefore, the camera must have at least a 157.4° horizontal FOV to capture a 10-meter-wide area at a 1-meter distance.

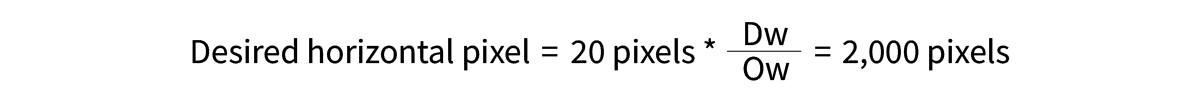

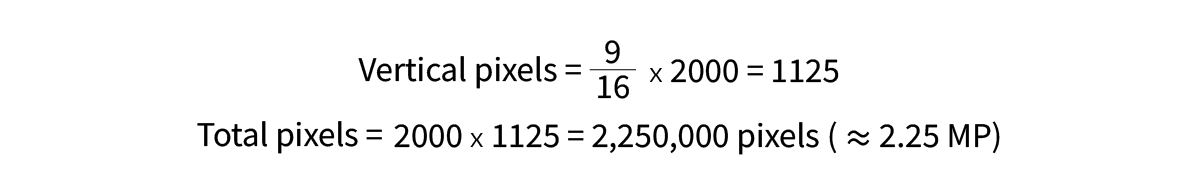

◆ Resolution:

At the minimum detection distance of 10 meters, this FOV covers a detection width (Dw) of 100 meters. To detect an object that is 1 meter wide (Ow) with at least 20 pixels across it on the image sensor, the required number of horizontal pixels can be calculated as:

$$N_h = \frac{D_w}{O_w} \times 20 = \frac{100\ \text{m}}{1\ \text{m}} \times 20 = 2000\ \text{pixels}$$

If the camera uses a 16:9 aspect ratio image sensor:

$$N_v = 2000 \times \frac{9}{16} = 1125\ \text{pixels}, \qquad N_h \times N_v = 2000 \times 1125 = 2.25\ \text{megapixels}$$

In this example, a camera with at least 2.25 megapixels is required to meet the detection requirements.

Figure. Illustration of the FOV and resolution calculation

By defining the maximum speed, control time, minimum detection distance, and object size, engineers can accurately determine the camera’s FOV and resolution. This ensures that the vision system is optimized for safe navigation and precise obstacle detection in outdoor environments. A higher-resolution camera also extends the detection distance, which buys more reaction time and allows smoother vehicle control.
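The sizing steps above can be sketched as a small calculator. This is a minimal sketch using the simple pinhole geometry of the worked example; the function names are hypothetical, and lens distortion, mounting angle, and braking distance are deliberately left out.

```python
import math


def min_detection_distance_m(speed_kmh: float, reaction_time_s: float) -> float:
    """Distance travelled during the reaction time, in meters."""
    return speed_kmh / 3.6 * reaction_time_s


def horizontal_fov_deg(coverage_width_m: float, distance_m: float) -> float:
    """Horizontal FOV needed to span coverage_width_m at distance_m."""
    return math.degrees(2 * math.atan(coverage_width_m / (2 * distance_m)))


def detection_width_m(fov_deg: float, distance_m: float) -> float:
    """Width covered by the given horizontal FOV at distance_m."""
    return 2 * distance_m * math.tan(math.radians(fov_deg) / 2)


def required_pixels(detect_width_m: float, object_width_m: float,
                    pixels_on_object: int, aspect=(16, 9)):
    """Horizontal and vertical pixel counts for the given aspect ratio."""
    # Round first so floating-point noise does not add a spurious pixel.
    horizontal = math.ceil(round(detect_width_m / object_width_m * pixels_on_object, 6))
    vertical = math.ceil(round(horizontal * aspect[1] / aspect[0], 6))
    return horizontal, vertical


if __name__ == "__main__":
    distance = min_detection_distance_m(speed_kmh=18, reaction_time_s=2)
    fov = horizontal_fov_deg(coverage_width_m=10, distance_m=1)
    width = detection_width_m(fov, distance)
    h_px, v_px = required_pixels(width, object_width_m=1, pixels_on_object=20)
    print(f"detect at {distance:.1f} m, FOV {fov:.1f} deg, width {width:.1f} m")
    print(f"{h_px} x {v_px} pixels = {h_px * v_px / 1e6:.2f} MP")
```

With the inputs from the example, the sketch reproduces the figures in the text: 10 m detection distance, roughly 157.4° FOV, 100 m detection width, and 2000 × 1125 pixels (2.25 megapixels).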

oToBrite offers a wide range of camera resolutions, FOV configurations, and interface options to comprehensively address the diverse needs of autonomous driving applications. Explore our complete portfolio in the Camera Selector.

3. HDR and LED Flicker Mitigation (LFM)

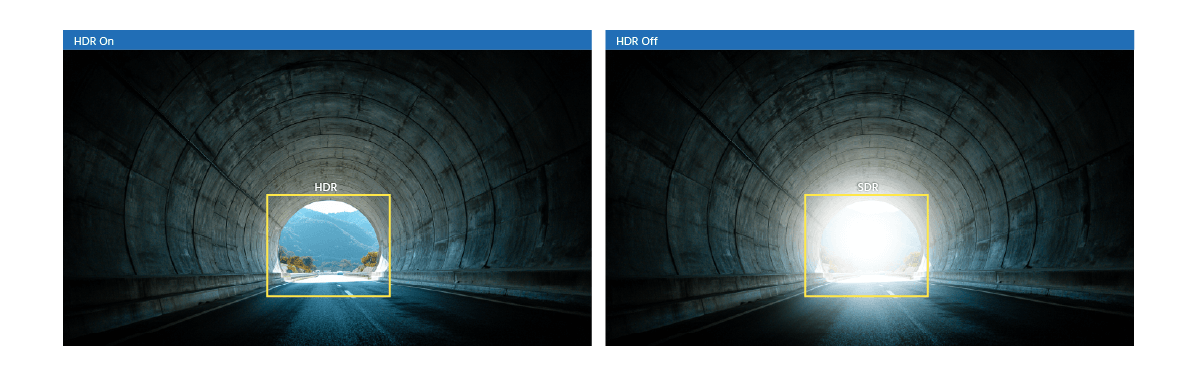

Outdoor environments expose cameras to highly variable lighting conditions, including bright sunlight, shadows, tunnels, and LED-based traffic lights. To ensure reliable perception in these scenarios, two critical camera features are High Dynamic Range (HDR) and LED Flicker Mitigation (LFM).

◆ HDR (High Dynamic Range):

HDR enables cameras to capture details in both bright and dark areas within the same frame. For outdoor autonomous vehicles, a dynamic range of at least 120 dB helps reduce overexposure and underexposure, ensuring clear image data for AI detection even under direct sunlight or in shaded conditions.

Figure. HDR ensures clear, balanced images for AI detection.
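To put the 120 dB figure in perspective, the sketch below converts between decibels, contrast ratio, and photographic stops. It assumes the 20·log10 convention commonly used for image sensor dynamic range; the function names are hypothetical.

```python
import math


def dynamic_range_db(brightest: float, darkest: float) -> float:
    """Dynamic range in dB for a brightest-to-darkest signal ratio."""
    return 20 * math.log10(brightest / darkest)


def contrast_ratio_from_db(db: float) -> float:
    """Brightest-to-darkest ratio that corresponds to a dB value."""
    return 10 ** (db / 20)


def stops_from_db(db: float) -> float:
    """Equivalent number of stops (doublings of light)."""
    return math.log2(contrast_ratio_from_db(db))


if __name__ == "__main__":
    ratio = contrast_ratio_from_db(120)
    print(f"120 dB is about {ratio:,.0f}:1, or {stops_from_db(120):.1f} stops")
```

Under this convention, 120 dB corresponds to a contrast ratio of about one million to one, or roughly 20 stops.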

◆ LFM (LED Flicker Mitigation):

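Many outdoor environments contain LED-based traffic lights, signage, and vehicle indicators. LEDs are typically driven by pulsed current, so a camera using a short exposure can capture them as flickering or switched off. LFM keeps these light sources rendered consistently from frame to frame, allowing the perception system to correctly recognize traffic signals, warning lights, and other LED-based visual cues.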

Figure. LFM ensures accurate recognition of traffic signals and warning indicators.
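A simplified timing model illustrates why short exposures can miss a pulsed LED. The sketch below assumes an ideal rectangular pulse train and a randomly phased exposure window; the 90 Hz frequency, 10% duty cycle, and 0.1 ms exposure are hypothetical values chosen for illustration, and the model does not describe how any particular sensor implements LFM.

```python
def led_capture_probability(exposure_s: float, pwm_hz: float, duty_cycle: float) -> float:
    """Chance that a randomly timed exposure overlaps an LED on-pulse."""
    period = 1.0 / pwm_hz
    on_time = duty_cycle * period
    # The window overlaps a pulse if it starts within (exposure + on_time) of one.
    return min(1.0, (exposure_s + on_time) / period)


def min_exposure_for_guaranteed_capture(pwm_hz: float, duty_cycle: float) -> float:
    """Shortest exposure that can never fall entirely inside the LED off-time."""
    return (1.0 - duty_cycle) / pwm_hz


if __name__ == "__main__":
    # Assumed LED drive: 90 Hz pulses, 10% duty cycle; bright-daylight exposure of 0.1 ms.
    p = led_capture_probability(exposure_s=0.0001, pwm_hz=90, duty_cycle=0.1)
    t = min_exposure_for_guaranteed_capture(pwm_hz=90, duty_cycle=0.1)
    print(f"short exposure sees the LED in about {p:.0%} of frames")
    print(f"exposure needed to always see it: {t * 1000:.1f} ms")
```

In this model, the exposure has to span the LED's full off-time before every frame is guaranteed to contain light from it, which conflicts with the short exposures needed in bright sunlight. That conflict is the problem LFM addresses.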

Cameras equipped with HDR and LFM not only improve image quality but also enhance the reliability of AI perception, especially in complex outdoor conditions where lighting can change abruptly or contain high-frequency LED sources.

4. Automotive-Grade Camera

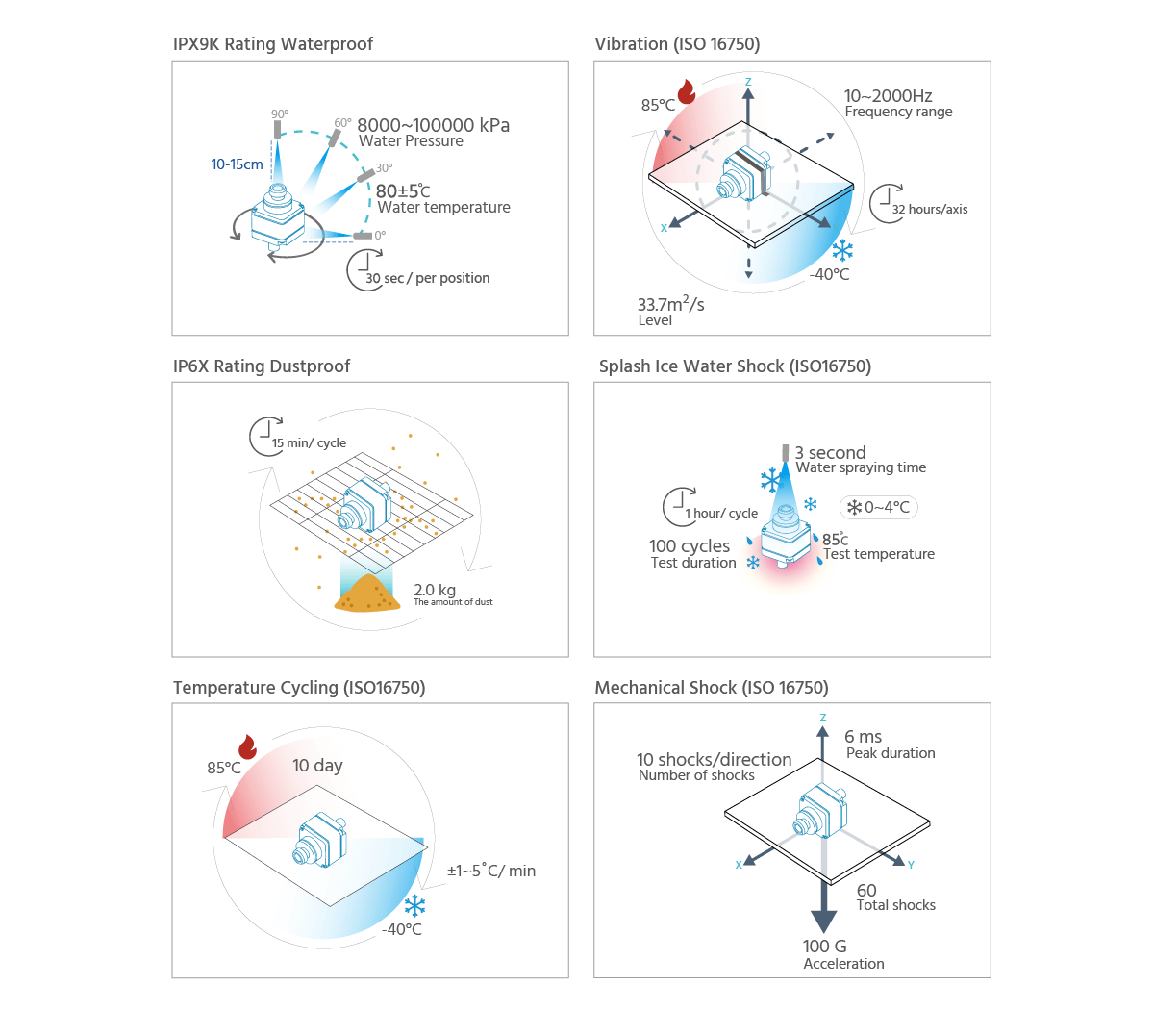

Outdoor autonomous vehicles are often deployed in environments where temperatures can vary drastically, from extreme heat during summer to freezing conditions in winter. To ensure stable performance under such conditions, it is crucial to choose cameras equipped with automotive-grade image sensors that meet stringent automotive standards such as AEC-Q100 for sensor reliability and ISO 16750 for environmental durability testing. These cameras must withstand wide operating temperature ranges, typically from -40°C to +85°C, and offer the robustness required for continuous use in harsh outdoor conditions.

5. Reliability

In real-world outdoor operations, cameras face constant exposure to vibration, rain, dust, mud, and rapid temperature changes, any of which can degrade image quality or even lead to device failure. To ensure long-term reliability, camera solutions must be tested and proven to meet the following standards:

- Vibration and shock resistance (ISO 16750)

- Ingress protection (IP67/IP69K) for dust and water resistance

Compliance with these tests validates the camera’s ability to endure harsh conditions, maintain consistent image quality, and reduce downtime in mission-critical outdoor autonomous vehicle applications.

Figure. oToBrite’s cameras meet automotive-grade reliability standards through rigorous testing

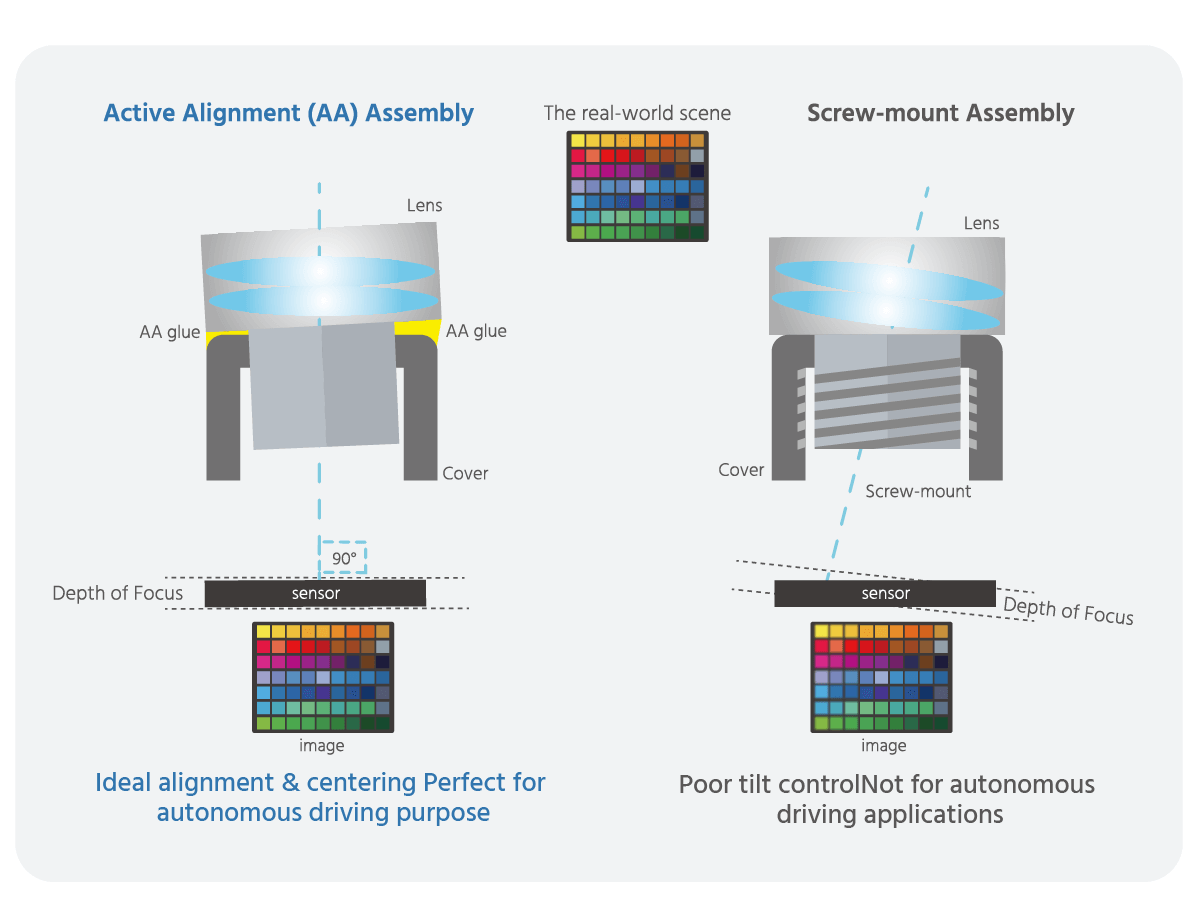

6. Active Alignment (AA) Assembly

For outdoor autonomous vehicles, camera image clarity is critical to ensure that every pixel contributes to accurate perception and reliable AI detection. Active Alignment (AA) is a precision camera module assembly process that aligns the lens and image sensor at the pixel level during manufacturing.

Unlike traditional mechanical alignment, such as the C-mount or S-mount lenses widely used in industrial cameras, AA uses real-time optical feedback to achieve sub-micron accuracy in lens positioning. This process minimizes optical defects such as blurring, chromatic aberration, or pixel misalignment, resulting in sharper images across the entire field of view.

By adopting AA assembly, camera manufacturers can deliver modules optimized for high-performance perception in harsh outdoor environments, with consistent sharpness across the whole image, which is critical for autonomous vehicles.

Figure. Comparison of Active Alignment assembly and Screw-mount Assembly

7. Camera Quantity and Processing Requirements

The total number of cameras on an outdoor autonomous vehicle is a critical factor that directly determines the specifications of the computing platform. More cameras mean higher image data throughput, greater bandwidth demand, and increased AI processing loads. The choice of processing hardware must therefore match the camera configuration from the start. Take the NVIDIA Jetson platform, which is widely used in autonomous vehicles, as an example:

- 1–4 cameras: NVIDIA Jetson Orin™ NX / Nano

- 4–8 cameras: NVIDIA Jetson AGX Orin™

- 8+ cameras: NVIDIA Jetson Thor

By determining the number of cameras early, engineers can correctly size the processing hardware and ensure sufficient bandwidth and AI performance for real-time autonomy.
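The tiers listed above can be turned into a first-pass sizing helper. This is a rough sketch, not a vendor recommendation: the function names are hypothetical, the overlapping boundaries (4 and 8 cameras) are assigned to the smaller platform as an assumption, and the 1080p, 30 fps stream is an example value. Actual limits depend on resolution, frame rate, and the AI workload.

```python
def suggest_jetson_tier(camera_count: int) -> str:
    """Map a camera count to the platform tiers listed in this article."""
    if camera_count < 1:
        raise ValueError("camera_count must be at least 1")
    if camera_count <= 4:   # assumption: 4 cameras stays on the smaller tier
        return "NVIDIA Jetson Orin NX / Nano"
    if camera_count <= 8:   # assumption: 8 cameras stays on the middle tier
        return "NVIDIA Jetson AGX Orin"
    return "NVIDIA Jetson Thor"


def aggregate_pixel_rate_mpps(camera_count: int, width: int, height: int, fps: float) -> float:
    """Total pixels per second across all cameras, in megapixels per second."""
    return camera_count * width * height * fps / 1e6


if __name__ == "__main__":
    # Assumed configuration: six 1920x1080 cameras at 30 fps.
    cameras = 6
    rate = aggregate_pixel_rate_mpps(cameras, 1920, 1080, 30)
    print(f"{cameras} cameras: {rate:.0f} MP/s, start with {suggest_jetson_tier(cameras)}")
```

The aggregate pixel rate gives a quick way to compare a planned camera set against the ingest and inference capacity of a candidate platform before committing to hardware.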

Conclusion

Selecting cameras for outdoor autonomous vehicles requires a holistic approach that considers interface, FOV, HDR/LFM capabilities, automotive-grade standards, reliability, optical assembly, and processing requirements. Addressing these seven factors enables engineers to build vision systems that deliver the precision, durability, and real-time performance essential for safe and efficient autonomous operation in challenging environments.

oToBrite offers a wide range of GMSL™ cameras featuring sensor options such as SONY IMX390, IMX490, IMX623, IMX728, ISX021, ISX031, and onsemi AR0823, with FOV choices from 30° to 195°, meeting the needs of diverse autonomous driving applications.

Key Features:

- Support edge AI computing modules, including NVIDIA Jetson AGX Orin™, Orin™ NX, Orin™ Nano, and Intel® Core™ Ultra 7/5 processors.

- Fully compatible with leading IPC platforms, including Advantech, ADLINK, AVerMedia, Axiomtek, Neousys, SINTRONES, and Vecow, ensuring reliable deployment in edge AI and industrial applications.

- Adopted by several automotive OEMs, including Daimler Truck, Toyota, and Xpeng, with over 1.2 million automotive cameras shipped to date.

- Passed 20+ automotive-grade tests, including operation from -40°C to +85°C and 100 G shock.

- Rigorously tested to meet IP67 and IP69K standards, ensuring excellent dust and water resistance.

- In-house 5/6-axis active alignment (AA) assembly delivers sharper, more reliable images with pixel-level precision than screw-mount assembly.

- HDR and LED flicker mitigation deliver clear, consistent images in low-light, high-contrast, and dynamic lighting conditions.

For more details on camera solutions purpose-built for AMRs and UGVs, please visit oToBrite Robotics Cameras.
