Parking Assist Evolution: From Back-up Alarm to Vision-AI Autonomous Parking

Parking has always been one of the trickiest tasks for drivers. From the early days of back-up alarms and simple beeping sensors to today’s camera-based AI systems capable of fully autonomous valet parking, the evolution of Parking Assist represents one of the most visible milestones in the development of advanced driver assistance systems (ADAS).

The Beginning: Sonar-Based Back-up Alarm Systems

Sonar (ultrasonic sensors) first appeared in vehicles in the late 1980s to early 1990s as back-up alarm systems, typically with two to four sensors mounted on the rear bumper. Ultrasonic transducers emit high-frequency sound waves that bounce back upon hitting an object. By measuring the time it takes for the waves to return, the system calculates the distance to the obstacle. This information is conveyed through audible beeps or visual alerts on the driver interface, aiding collision avoidance during parking or reversing.
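The time-of-flight principle described above can be illustrated with a minimal sketch. The function name and the fixed speed of sound (about 343 m/s in dry air at 20 °C) are assumptions for illustration, not details of any particular production sensor.

```python
# Minimal time-of-flight sketch; names and constants are illustrative assumptions.
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in dry air at 20 °C


def echo_distance_m(round_trip_s: float,
                    speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Return the distance to an obstacle from the round-trip echo time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # An echo that returns after 10 ms corresponds to roughly 1.7 m.
    print(f"{echo_distance_m(0.010):.3f} m")
```

The division by two is the key step: the measured time covers the outbound and return paths, so only half of it corresponds to the distance to the obstacle.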

In reverse parking and low-speed maneuvering, sonar sensors provide graduated warnings: beeps become faster and visual indicators stronger as the vehicle nears an object. Some more advanced sonar-based systems can also apply the brakes automatically to prevent collisions, enhancing safety.
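A graduated warning of this kind can be sketched as a mapping from measured distance to the pause between beeps. The thresholds (1.5 m and 0.3 m) and the pause lengths below are hypothetical values chosen for illustration; real systems tune them per vehicle.

```python
# Hypothetical graduated-warning sketch; all thresholds are assumed values.
from typing import Optional

WARN_START_M = 1.5   # assumed distance at which beeping begins
CONTINUOUS_M = 0.3   # assumed distance at which the tone becomes continuous
SLOWEST_PAUSE_S = 0.7
FASTEST_PAUSE_S = 0.1


def beep_pause_s(distance_m: float) -> Optional[float]:
    """Return the pause between beeps, 0.0 for a continuous tone, None for silence."""
    if distance_m > WARN_START_M:
        return None
    if distance_m <= CONTINUOUS_M:
        return 0.0
    # Linear interpolation: shorter pauses (faster beeps) as the obstacle nears.
    fraction = (distance_m - CONTINUOUS_M) / (WARN_START_M - CONTINUOUS_M)
    return FASTEST_PAUSE_S + fraction * (SLOWEST_PAUSE_S - FASTEST_PAUSE_S)


if __name__ == "__main__":
    for d in (2.0, 1.5, 0.9, 0.2):
        print(d, beep_pause_s(d))
```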

Ultrasonic Sensor Limitations and the Shift to Vision-Based Parking Systems

Ultrasonic sensors, foundational to early back-up alarm and parking assist systems, face several technical limitations that have driven the adoption of vision-based alternatives. The main limitations of ultrasonic sensors include:

- Limited detection range: Effective only within 0.2 to 3 meters, suitable solely for low-speed parking scenarios.

- Environmental interference: Rain, snow, temperature variations, and ambient noise can distort ultrasonic waves, leading to inaccurate distance measurements.

- Lack of object classification: These sensors measure distance but cannot identify object types (e.g., pedestrians, vehicles, or walls) or detect movement, limiting situational awareness.
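The effect of temperature on ultrasonic ranging can be illustrated with a short sketch. It assumes the common linear approximation for the speed of sound in air, c ≈ 331.3 + 0.606·T m/s (T in °C), and a hypothetical sensor that always assumes 20 °C; neither detail comes from a specific product.

```python
# Sketch of temperature-induced ranging error; the uncompensated sensor is hypothetical.

def speed_of_sound_m_s(temp_c: float) -> float:
    """Approximate speed of sound in dry air (linear approximation)."""
    return 331.3 + 0.606 * temp_c


def reported_distance_m(true_distance_m: float,
                        actual_temp_c: float,
                        assumed_temp_c: float = 20.0) -> float:
    """Distance reported by a sensor that assumes a fixed air temperature."""
    # The echo time depends on the actual speed of sound...
    round_trip_s = 2.0 * true_distance_m / speed_of_sound_m_s(actual_temp_c)
    # ...but the sensor converts it back using its assumed speed.
    return speed_of_sound_m_s(assumed_temp_c) * round_trip_s / 2.0


if __name__ == "__main__":
    # At -10 °C, an obstacle 2 m away is reported as roughly 2.11 m.
    print(f"{reported_distance_m(2.0, actual_temp_c=-10.0):.2f} m")
```

Under these assumptions, a 30 °C temperature gap produces an error of roughly 5 to 6 percent, which is noticeable at parking distances unless the system compensates for temperature.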

To overcome these constraints, the automotive industry has shifted toward vision-based systems. oToBrite’s high-performance Rear Cameras and Surround Cameras provide clear, real-time images of the vehicle's surroundings, enhancing visibility and enabling detection of pedestrians, vehicles, curbs, and other obstacles. These systems offer multi-angle views and image-based alerts, improving safety and driver confidence during parking.

Evolving safety regulations have further accelerated this transition. In the U.S., FMVSS 111 requires rearview cameras in new passenger vehicles to ensure clear rear visibility and prevent back-over collisions, particularly with pedestrians or children. In Europe, ECE R46 (UN R46) governs indirect vision devices (mirrors, cameras, etc.), while ECE R158 (UN R158) covers reversing-motion devices such as rear-view cameras and detection systems. These standards underscore the growing reliance on camera-centric solutions, such as oToBrite’s advanced camera systems, to meet modern safety demands.

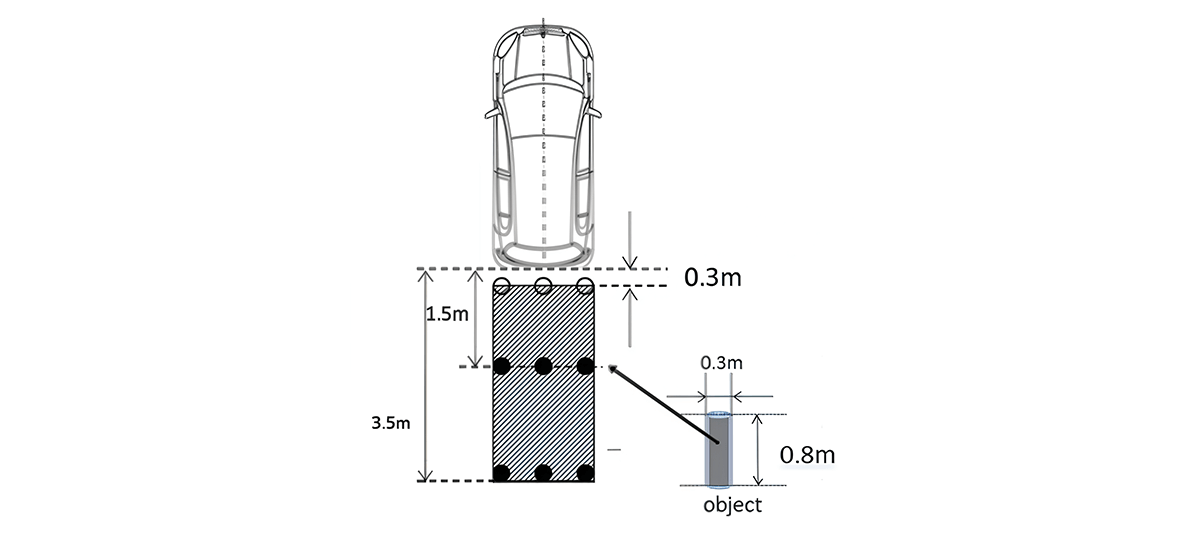

Figure 1. UN R158 - Close-proximity rear-view field of vision

Sonar-Based Automatic Parking Systems

Parking in crowded urban areas with tight spaces can be challenging. To address this, the first automatic parking systems, introduced in the mid-2000s, used 8 to 12 ultrasonic sensors on the front and rear bumpers to detect available spaces and guide steering automatically. By measuring distances to nearby obstacles with ultrasonic waves, these systems reduced driver workload.
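One way to picture sonar-based space detection is as a scan for gaps: as the vehicle drives past a row of parked cars, a side-facing sensor records the lateral distance at each position, and a long enough stretch of "far" readings indicates a free space. The sketch below assumes this simplified model; the function name, sample format, and thresholds are hypothetical.

```python
# Simplified gap-detection sketch; sample format and thresholds are assumptions.
from typing import List, Tuple


def find_parking_gaps(samples: List[Tuple[float, float]],
                      min_depth_m: float = 2.0,
                      min_length_m: float = 6.0) -> List[Tuple[float, float]]:
    """Find free stretches along the travel path.

    samples: (position_m along the travel path, lateral_distance_m) pairs,
             ordered by position.
    Returns (start_m, end_m) for each stretch where the lateral reading is at
    least min_depth_m over at least min_length_m of travel.
    """
    gaps = []
    start = None
    last_free = None
    for position_m, lateral_m in samples:
        if lateral_m >= min_depth_m:
            if start is None:
                start = position_m  # a free stretch begins here
            last_free = position_m
        else:
            # An obstacle ends the current stretch; keep it if long enough.
            if start is not None and last_free - start >= min_length_m:
                gaps.append((start, last_free))
            start = None
    if start is not None and last_free - start >= min_length_m:
        gaps.append((start, last_free))
    return gaps


if __name__ == "__main__":
    # Parked cars at 0-1 m and 9-10 m of travel, open space in between.
    scan = [(float(i), 0.8 if i < 2 or i > 8 else 2.5) for i in range(11)]
    print(find_parking_gaps(scan))
```

A model like this only sees distances, which is why it cannot tell a marked parking space from any other gap between obstacles.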

However, sonar-based systems faced limitations, including low angular resolution, a short detection range (typically 0.2 to 3 meters), and susceptibility to environmental interference like rain or noise. They also couldn’t detect parking lines or identify object types, resulting in inconsistent performance and limited driver adoption. These constraints drove the industry toward camera- and AI-based vision systems, which offer higher accuracy, parking line recognition, and greater reliability for advanced parking assist technologies.

From Sonar to Vision-AI: The Rise of Auto Parking Assist (APA)

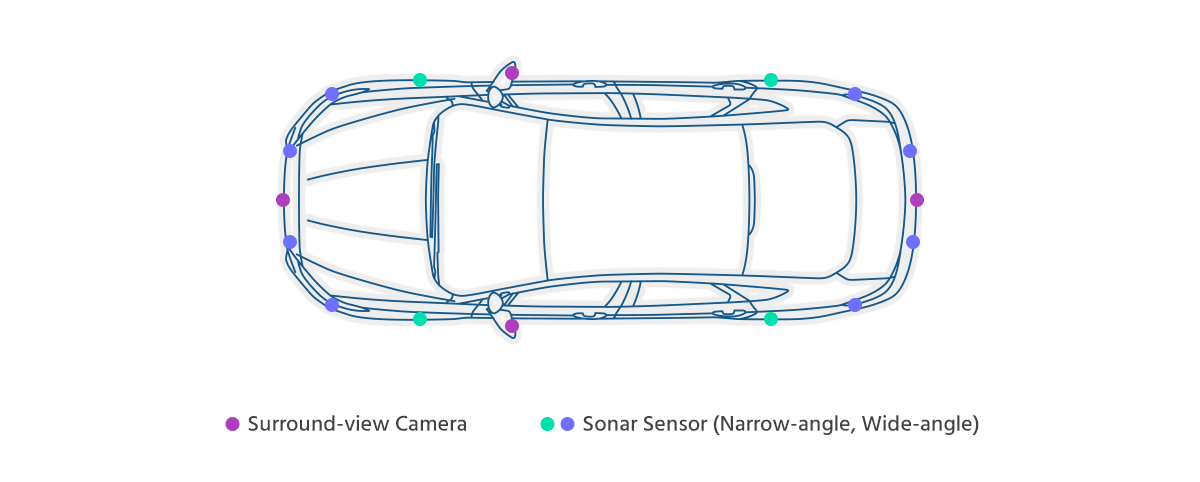

By 2018, the limitations of sonar-only parking systems had paved the way for a new generation of vision-based Auto Parking Assist (APA) technologies. Combining surround-view cameras with ultrasonic sensors, these systems provide real-time visual context, parking line detection, and precise obstacle recognition. AI-based image processing enhances detection accuracy and decision-making, achieving success rates above 90% in automated parking maneuvers.

oToBrite’s Vision-AI APA system exemplifies this progress. Equipped with four surround-view cameras and a dedicated ECU, it detects low obstacles, locates parking spaces, reads parking numbers, and identifies road users. Its proprietary Vision-AI models ensure reliable performance in challenging conditions like low light or high glare, delivering safe and efficient parking.

This evolution sets the stage for Automated Valet Parking (AVP), where vehicles autonomously navigate parking facilities using Vision-AI and SLAM technologies without driver supervision.

Figure 2. Deployment locations of surround-view cameras and sonar sensors

Overcoming Key Challenges in Vision-AI APA Systems

To ensure versatility, safety, and precision across diverse parking environments, oToBrite’s Vision-AI Auto Parking Assist (APA) System addresses several critical challenges:

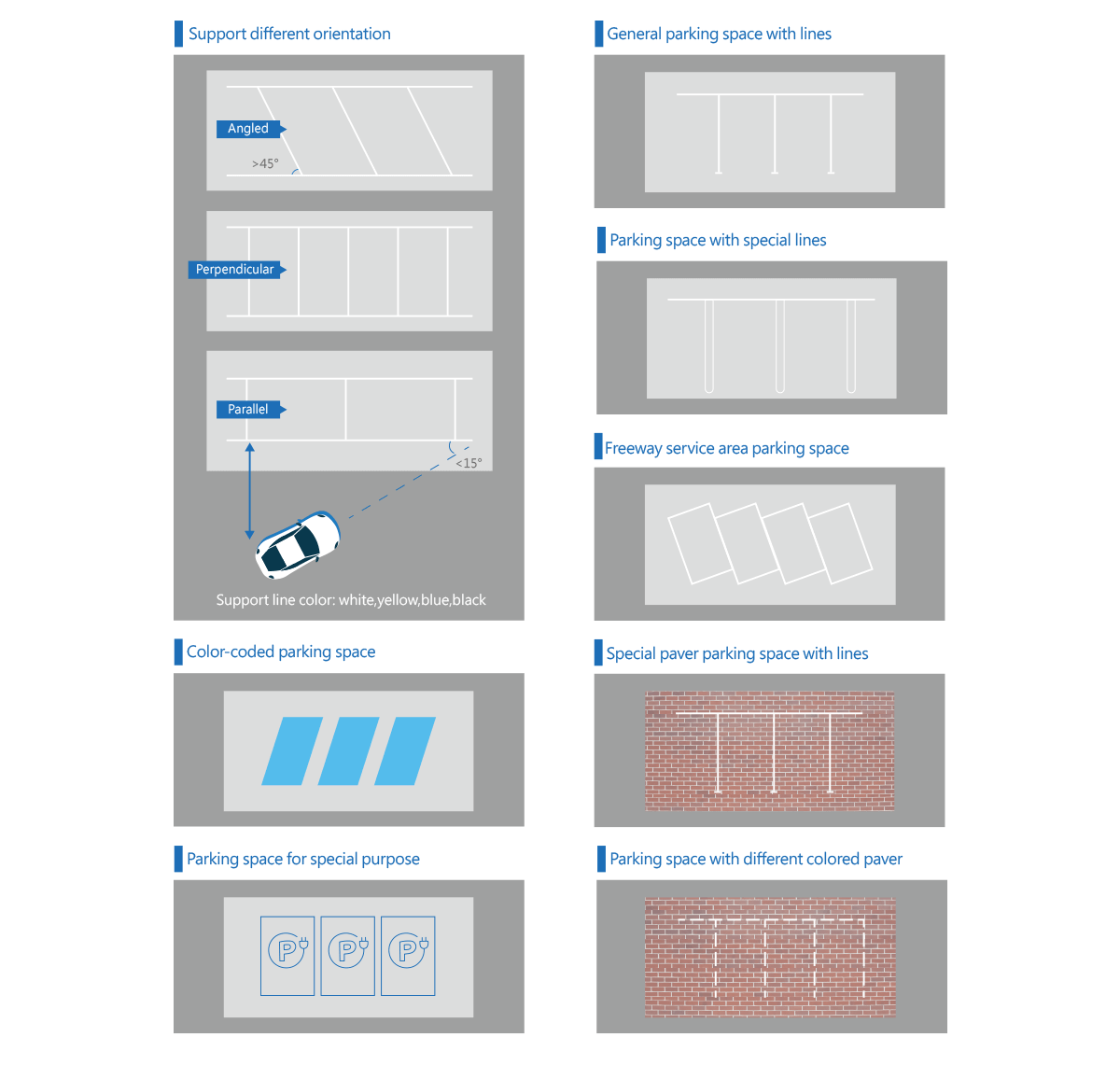

◆ Diverse Parking Lot Configurations

oToBrite’s APA System recognizes over 100 parking space types, including closed single lines, double arcs, T-shaped lines, and orientations like perpendicular, parallel, or 45-degree angled spaces. It adapts to various surface materials (concrete, asphalt, poly, epoxy, and bricks) and specialized spots (accessible, family, and EV charging) for broad compatibility.

Figure 3. oToBrite’s Vision-AI APA system supports over 100 parking space types with diverse layouts and surfaces.

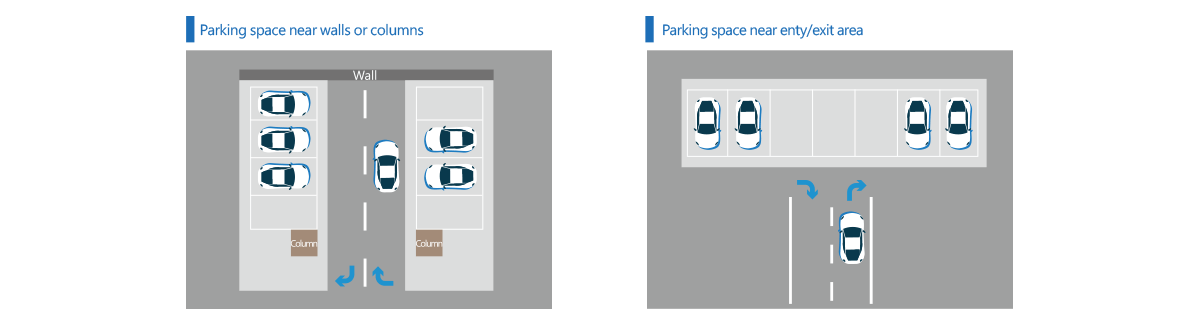

◆ Dead-end Parking Space Detection

oToBrite’s APA System excels in confined spaces near walls, pillars, or entry/exit zones, achieving positioning accuracy within 10 cm for precise and safe maneuvering in tight environments.

Figure 4. Full coverage with dead-end parking space detection

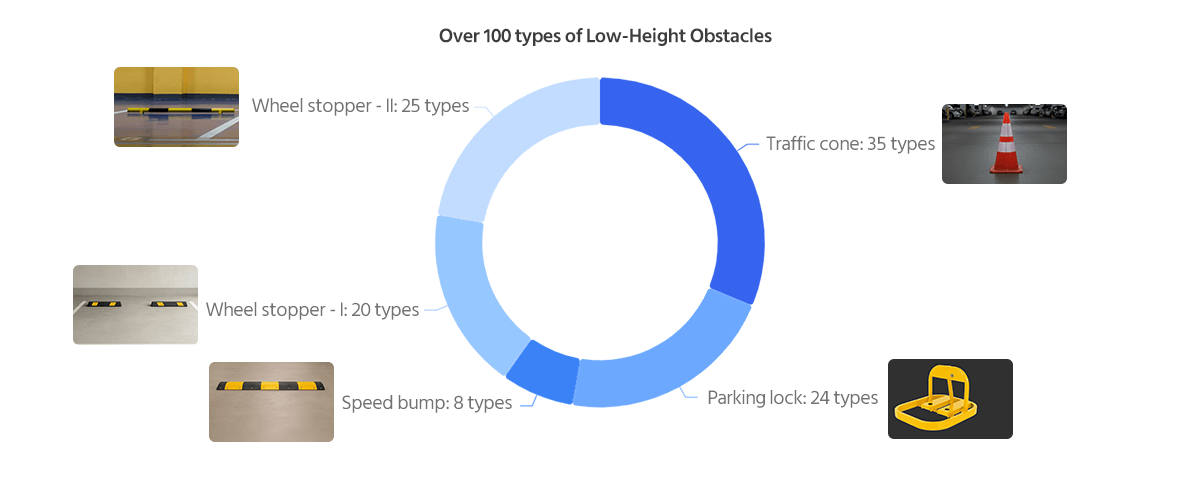

◆ Low-Height Obstacle Classification

To enhance safety, the system detects and classifies low-height objects like traffic cones, wheel stoppers, parking locks, speed bumps, and curbs, accurately calculating the vehicle’s drivable space to prevent collisions.

Figure 5. The system classifies over 100 types of low-height obstacles, including traffic cones, wheel stoppers, parking locks, and speed bumps.

By overcoming these challenges, oToBrite’s Vision-AI APA System delivers reliable and safe automated parking, paving the way for fully autonomous solutions like Automated Valet Parking (AVP).

From Assisted Parking to Full Autonomy: The Evolution Toward Automated Valet Parking (AVP)

The progression from Auto Parking Assist (APA) to Automated Valet Parking (AVP) is a vital step toward autonomous vehicles. APA automates steering, acceleration, and braking during parking under driver supervision, while AVP eliminates the need for any driver presence. After the driver exits at a drop-off point, the vehicle independently navigates the parking facility, locates an available space, parks, and returns to the pick-up area on command.
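The AVP flow described above (drop-off, search, park, summon, return) can be pictured as a small state machine. The sketch below is purely illustrative: the state names, event names, and transition table are hypothetical and do not describe oToBrite’s implementation.

```python
# Illustrative AVP flow as a state machine; all names are hypothetical.
from enum import Enum, auto
from typing import Iterable


class AvpState(Enum):
    AT_DROP_OFF = auto()
    SEARCHING = auto()
    PARKING = auto()
    PARKED = auto()
    RETURNING = auto()
    AT_PICK_UP = auto()


# (current state, event) -> next state
TRANSITIONS = {
    (AvpState.AT_DROP_OFF, "driver_exited"): AvpState.SEARCHING,
    (AvpState.SEARCHING, "space_found"): AvpState.PARKING,
    (AvpState.PARKING, "maneuver_done"): AvpState.PARKED,
    (AvpState.PARKED, "summon_requested"): AvpState.RETURNING,
    (AvpState.RETURNING, "arrived"): AvpState.AT_PICK_UP,
}


def next_state(state: AvpState, event: str) -> AvpState:
    """Advance the flow; events that do not apply leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events: Iterable[str],
        state: AvpState = AvpState.AT_DROP_OFF) -> AvpState:
    """Apply a sequence of events and return the final state."""
    for event in events:
        state = next_state(state, event)
    return state


if __name__ == "__main__":
    flow = ["driver_exited", "space_found", "maneuver_done",
            "summon_requested", "arrived"]
    print(run(flow).name)
```

Ignoring events that do not apply to the current state keeps the sketch simple; a real system would also need fault and abort handling, which is omitted here.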

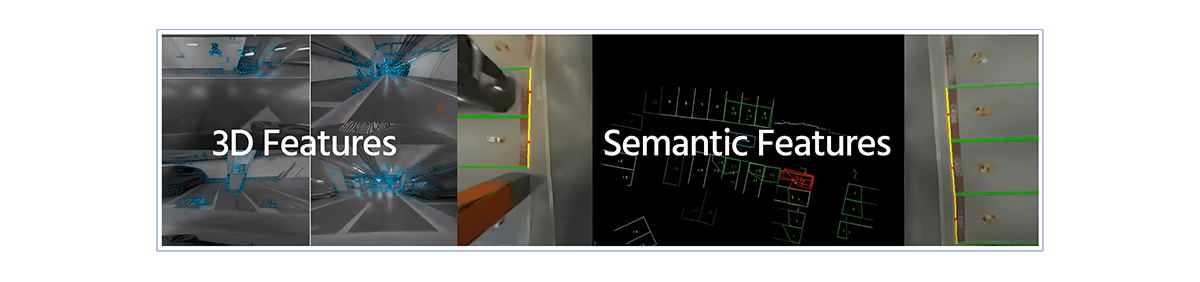

AVP achieves this through Vision-AI for environmental perception and SLAM (Simultaneous Localization and Mapping) for precise positioning and navigation. Combined with VSLAM (visual SLAM) technology and a single front camera, these capabilities enable a seamless upgrade from APA to AVP, turning parking into a scalable, self-guided operation in complex, dynamic settings.

oToBrite’s oToParking system, now in mass production, exemplifies this shift. Its self-developed VSLAM generates HD-map-free parking lot maps, allowing the vehicle to perceive spaces and self-localize to within 20 cm. The system memorizes up to 1 km of routes, supports autonomous circling and obstacle avoidance until it secures a spot, and includes mobile summon for retrieval to user-specified locations.

Figure 6. oToBrite’s SLAM uses multi-camera Vision-AI technology with semantic and 3D features. Semantic features cover various road markings as well as objects such as vehicles, pillars, walls, curbs, and wheel stoppers.

Key Features of oToBrite’s Automated Valet Parking (AVP)

- 1 km Route Memorization: Captures parking paths without lot reconstruction or external infrastructure.

- VSLAM Fusion: Integrates non-semantic and semantic mapping for seamless indoor/outdoor operation.

- Autonomous Navigation and Circling: Enables dynamic searching and parking in available spaces with real-time obstacle avoidance.

- One-Time Mapping: Powers both valet parking and summon functions with reliable, repeatable performance.

Learn more about oToParking - APA + AVP: https://www.otobrite.com/product/otoparking-apa-avp